Self-Playing Guitar

A musical journey using the Raspberry Pi

By James Pendergast (jcp327) and David Rusnak (dmr284).

Demonstrated on May 12, 2023.

This project was created to test the limits of a Raspberry

Pi-based guitar robot. We wanted to explore how close to

normal playing we could get with what we had available in

the lab.

Demonstration Video

Introduction

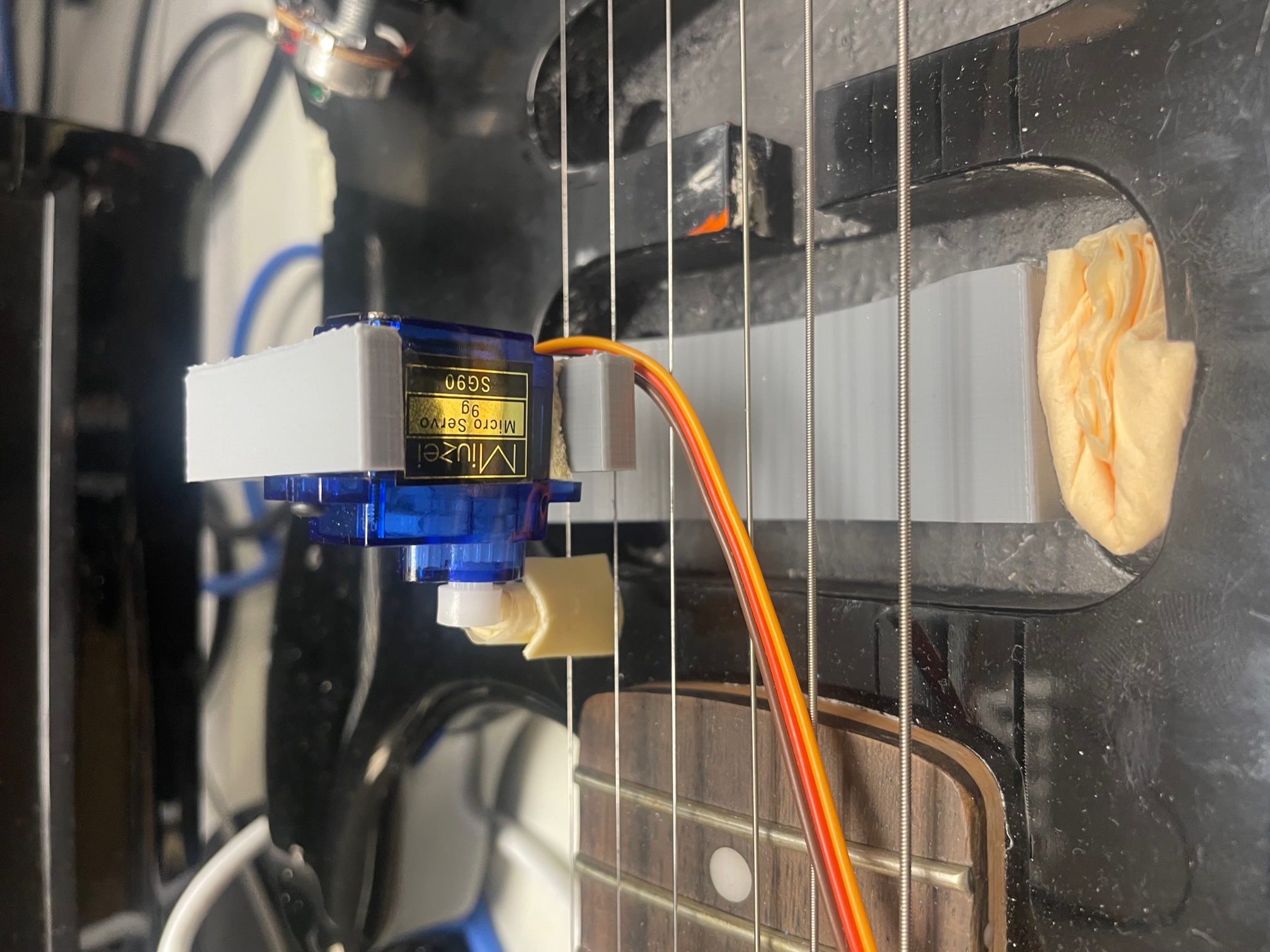

The hardware of the self-playing guitar includes an array of

12 servos that are individually positioned in their own

holders up the guitar neck. Because the servos are in their

own holders that then get attached to a wooden base, the

guitar itself is able to remain unmodified and the machine

could be placed on any guitar. The servos all line up above

the B string on the guitar. Each servo wing has a rubber

eraser on the end, mimicking a finger, which increases the

effective surface area and allows tolerances to be looser.

As each servo gets activated in the pattern of a song, the

eraser frets the guitar string at the corresponding note.

The 13th servo is placed inside a cavity for a pickup in the

guitar body. This servo has a piece of adhesive on the wing.

When the servo is activated to “pluck” the string, it

essentially hits the string with the adhesive with enough

force to slightly pull the string. Once the force that the

string has outweighs the force of the adhesive, it lets go

and the string vibrates enough for the guitar pickup to

catch the signal and send it to the amplifier.

The software that controls the servos is written in Python

and is run on a Raspberry Pi 4. The servos can be positioned

using angles, which is what we chose to use to get the most

uniform operation. There are functions for activating a

string and plucking the string that are then used in one

function that takes in one argument, a note in a song. The

note needs to include which servo needs to be activated and

the duration of the note. Once these two pieces of

information are known, the correct servo can be activated,

and the correct delay time can be used in the time.sleep()

function used to change the duration of each note. Once we

had the guitar playing basic songs, we added extra

functionality using speech recognition. The speech

recognizer waits for the user to say “Play me... [SONG

NAME]”, searches for the song, and plays it if it is

available in the library of songs. It uses audio input from

a small USB microphone connected to the Pi. Once the song is

completed, the user is prompted to ask for another song.

Project Objective:

- Have a modular frame that could be attached to any guitar.

- Allow for a full octave of notes to be played.

- Be able to play songs quickly enough to sound like the real song.

Design

Originally, we had thought that the GPT API would work for

our purposes, so we programmed a Python speech-to-text

script that sent the recognized speech to the GPT API as a

prompt. This way, we could modify the prompt before or after

the user inputs speech to match the kind of prompt we wanted

to have. One thing we tested (and believed worked) was

using a prompt that was formatted like this: “Tell me the

notes and note durations in the melody of [SONG NAME]”. This

seemed to work for a very small subset of songs. It would

return note names and durations which was all we needed,

since we had 12 servos on the B string, giving us access to

every note within one octave. We quickly realized that GPT

stopped working properly for other songs, so we had to

change our approach.
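To show why note names and durations were all we needed, here is a minimal sketch of how such a response could be turned into the servo and duration pairs the guitar plays. It assumes servo n sits over fret n of the B string and that -1 marks the open string, as in our song library. The `NOTE_TO_FRET` table and the `to_song` helper are hypothetical names used only for illustration, and only sharp spellings are handled.

```python
# Hypothetical helper: maps note names on the B string to servo numbers.
# Assumes servo n sits over fret n and that -1 marks the open string.
NOTE_TO_FRET = {
    "B": 0, "C": 1, "C#": 2, "D": 3, "D#": 4, "E": 5,
    "F": 6, "F#": 7, "G": 8, "G#": 9, "A": 10, "A#": 11,
}

def to_song(melody):
    """Convert [(note_name, seconds), ...] into [[servo, seconds], ...]."""
    song = []
    for name, seconds in melody:
        fret = NOTE_TO_FRET[name.strip().upper()]
        servo = -1 if fret == 0 else fret  # fret 0 is the open string
        song.append([servo, seconds])
    return song

# The first three notes of the "Smoke on the Water" riff: B, D, E.
riff = to_song([("B", 0.5), ("D", 0.5), ("E", 1)])
print(riff)
```

Because note names without an octave are ambiguous, this sketch always maps B to the open string and never selects the 12th servo.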

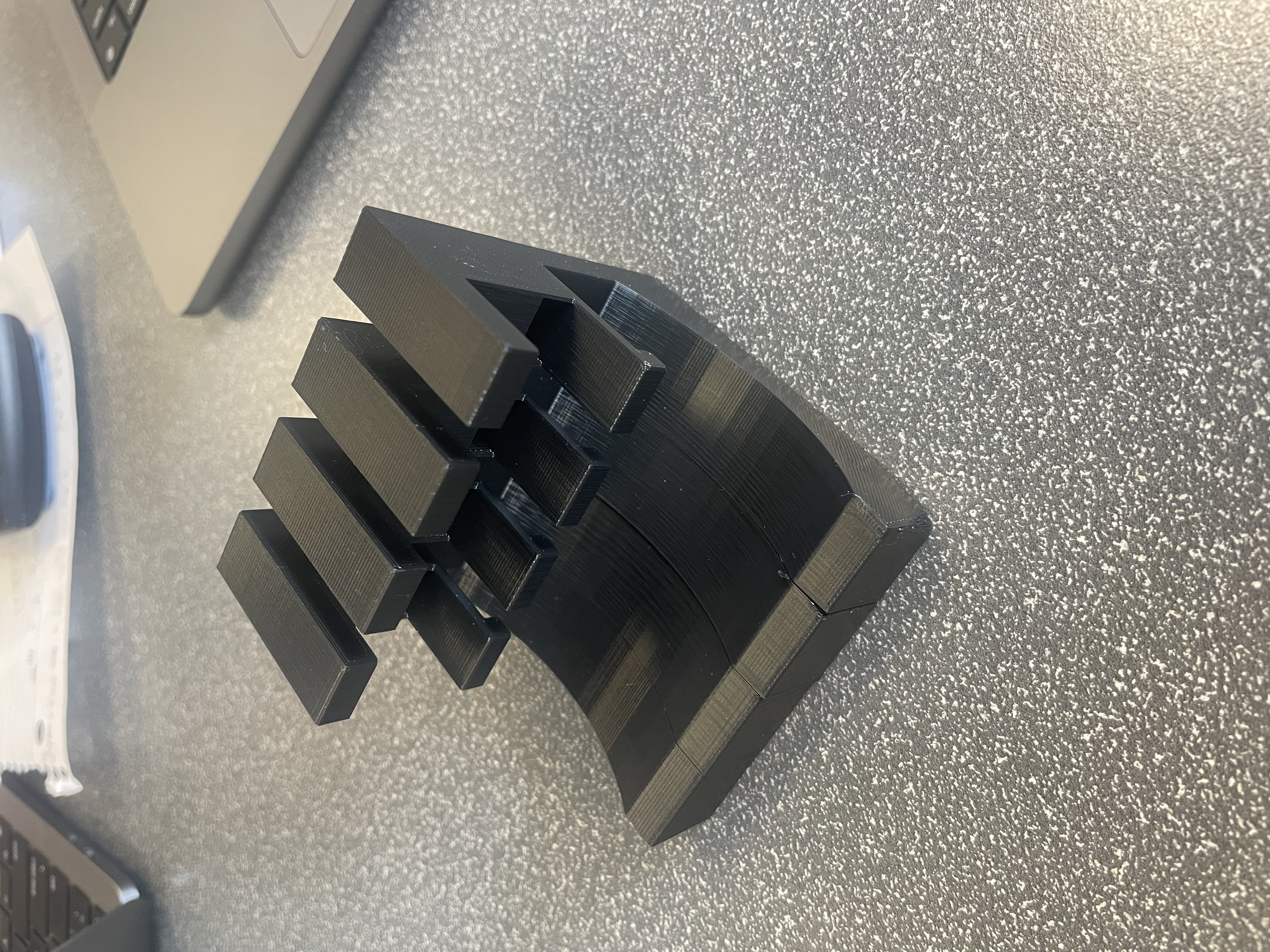

Mechanical Design

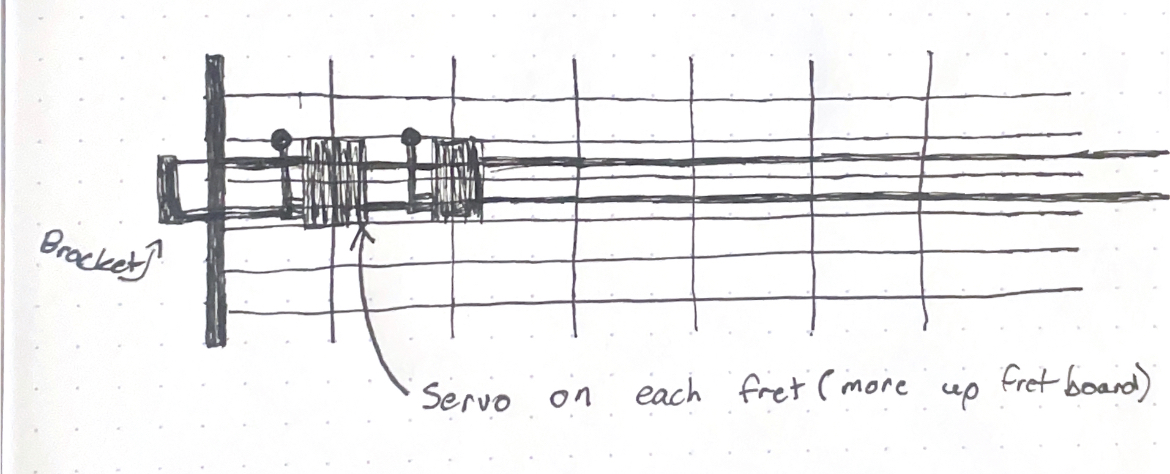

For this project, the mechanical design requirements involved a set of 12 servo motor holders that could be aligned along the guitar neck. Early on, we planned on using a long 3D printed rail along the fretboard that would hold each motor.

Original sketch of the idea

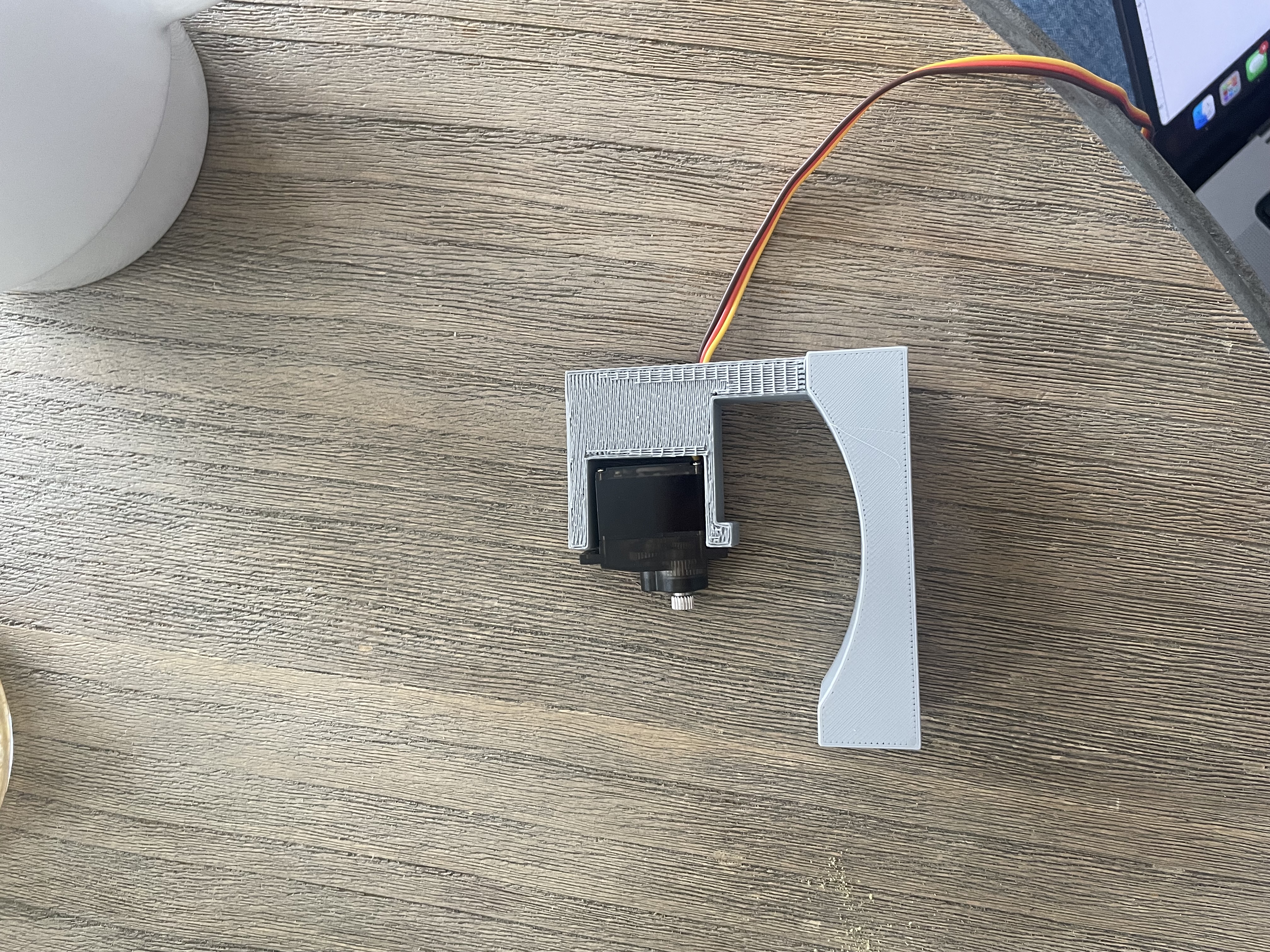

Eventually, we realized that printing holders separately and aligning them individually would make testing easier. We took measurements from the guitar and used Autodesk Inventor to create the first prototype of the servo holder. The original prototype was just a holder for the neck, used to see if we would have enough clearance and stability to use the base we wanted. It proved to be effective, so we built out the part in Inventor to add the arm that would hold the servo exactly over the B string. Below is a figure of the finished servo holder.

Finished servo holder

These individual holders were also much more reasonable to print given our resources. They placed the servos just above the B string of the guitar, with enough distance to allow the horns to push down into the fretboard. Eventually they were drilled into a larger wooden board to ensure that they were stable when the servos were activated. Now, this set of individual holders and the wooden board could serve as a frame that could hold any guitar.

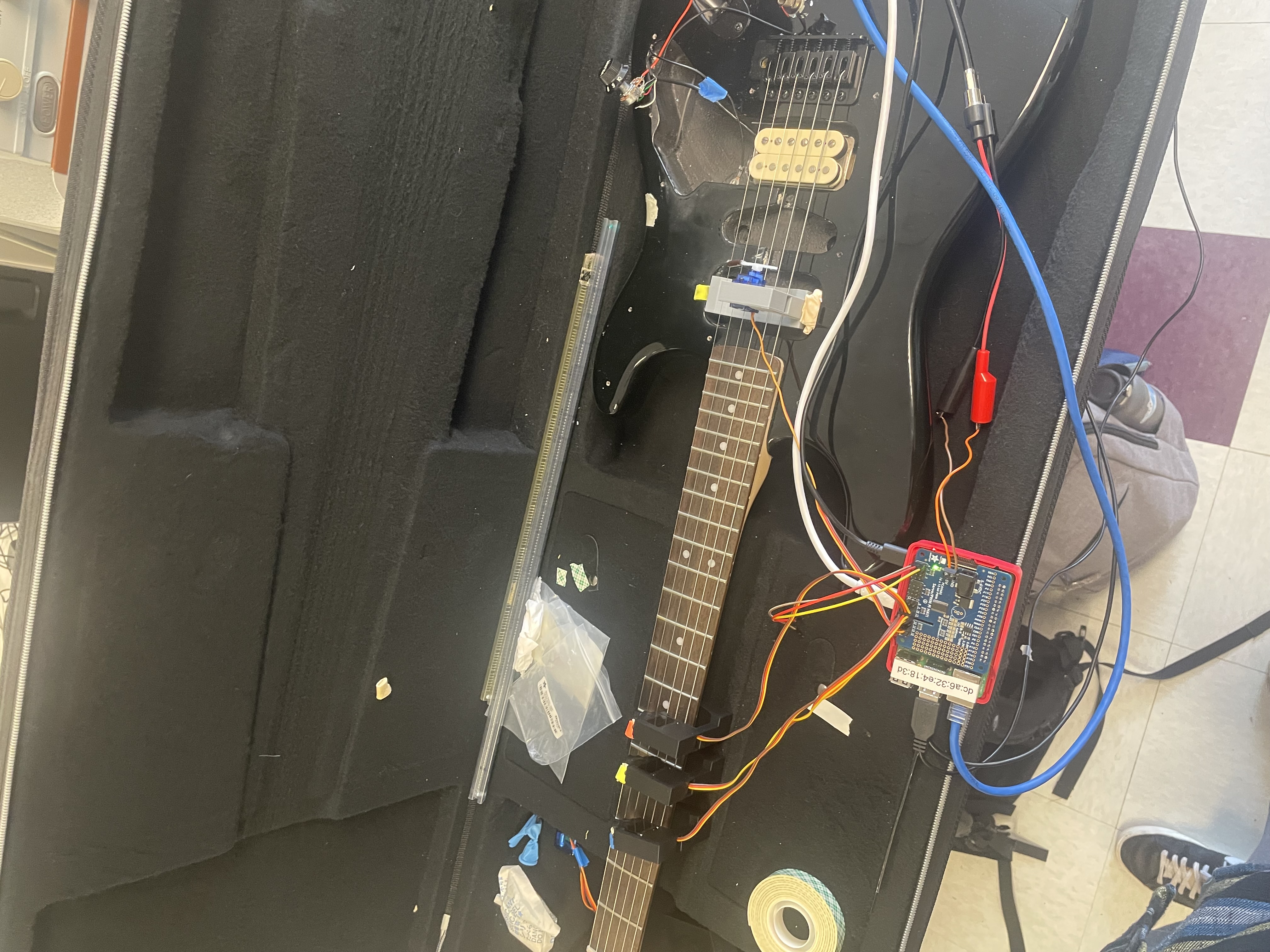

1. View up the neck while the servo board is attached to the guitar

2. Plucking mechanism

Our original rail idea could not be detached and put on separate guitars, which is a benefit of our later approach. For the set of motors along the neck, we attached erasers to the servo horns to allow for a soft but firm push on the fretboard. We tested harder, narrower materials, but they didn't press into the fretboard as nicely. In addition, there was one holder for the plucking motor in the pickup cavity of the guitar body. Rather than an eraser for this motor, we used a piece of tape along the horn to provide an adhesive touch to the B string. This adhesive touch produced sufficient sound when the motor approached, stuck to, and then pulled away from the string. We attempted to pluck the string directly with the servo horn, but the sound was inconsistent across different notes. These inconsistencies largely came from the height of the string changing when it was fretted, which caused the motor horn to either get caught on the string or miss it completely.

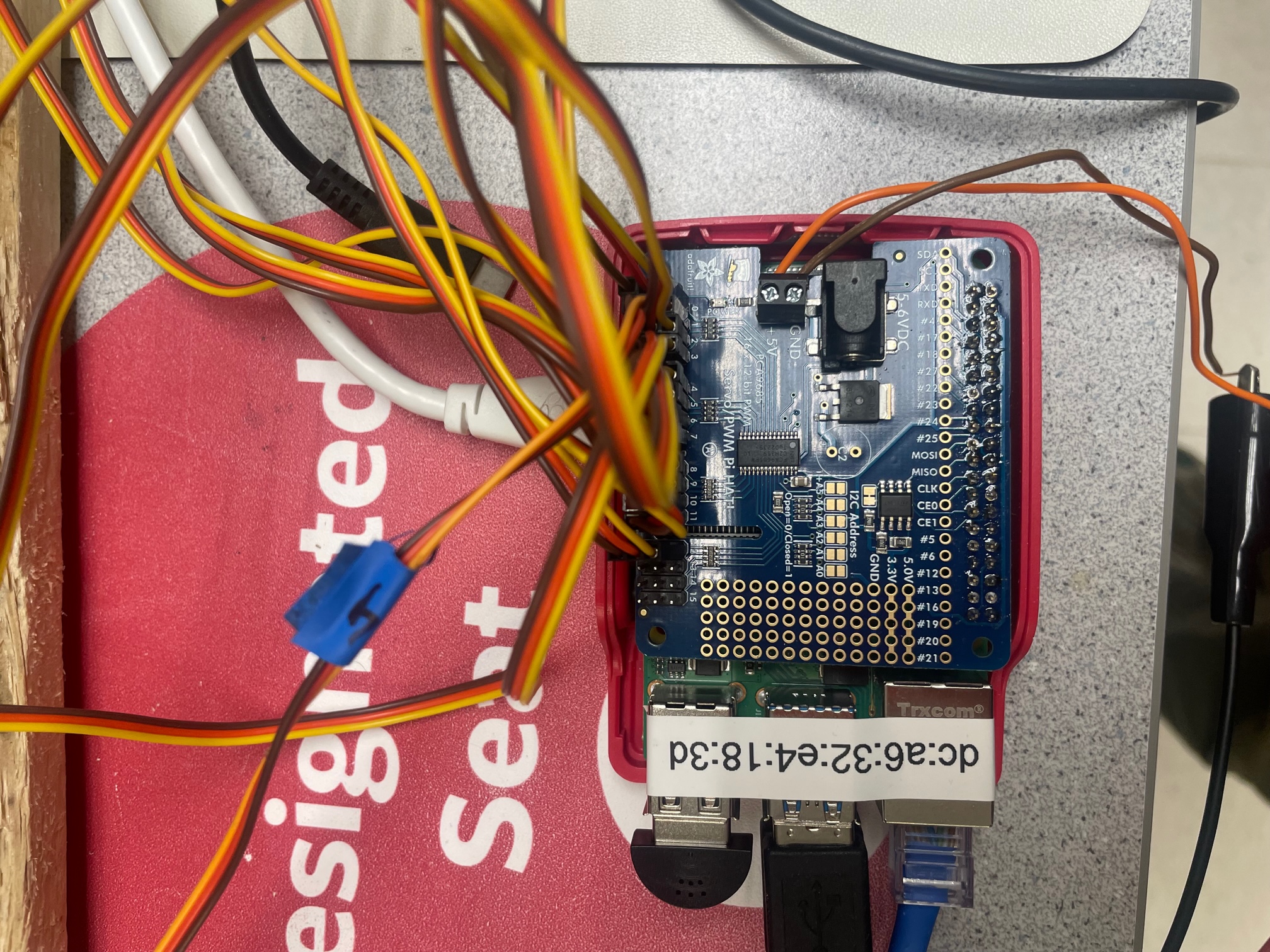

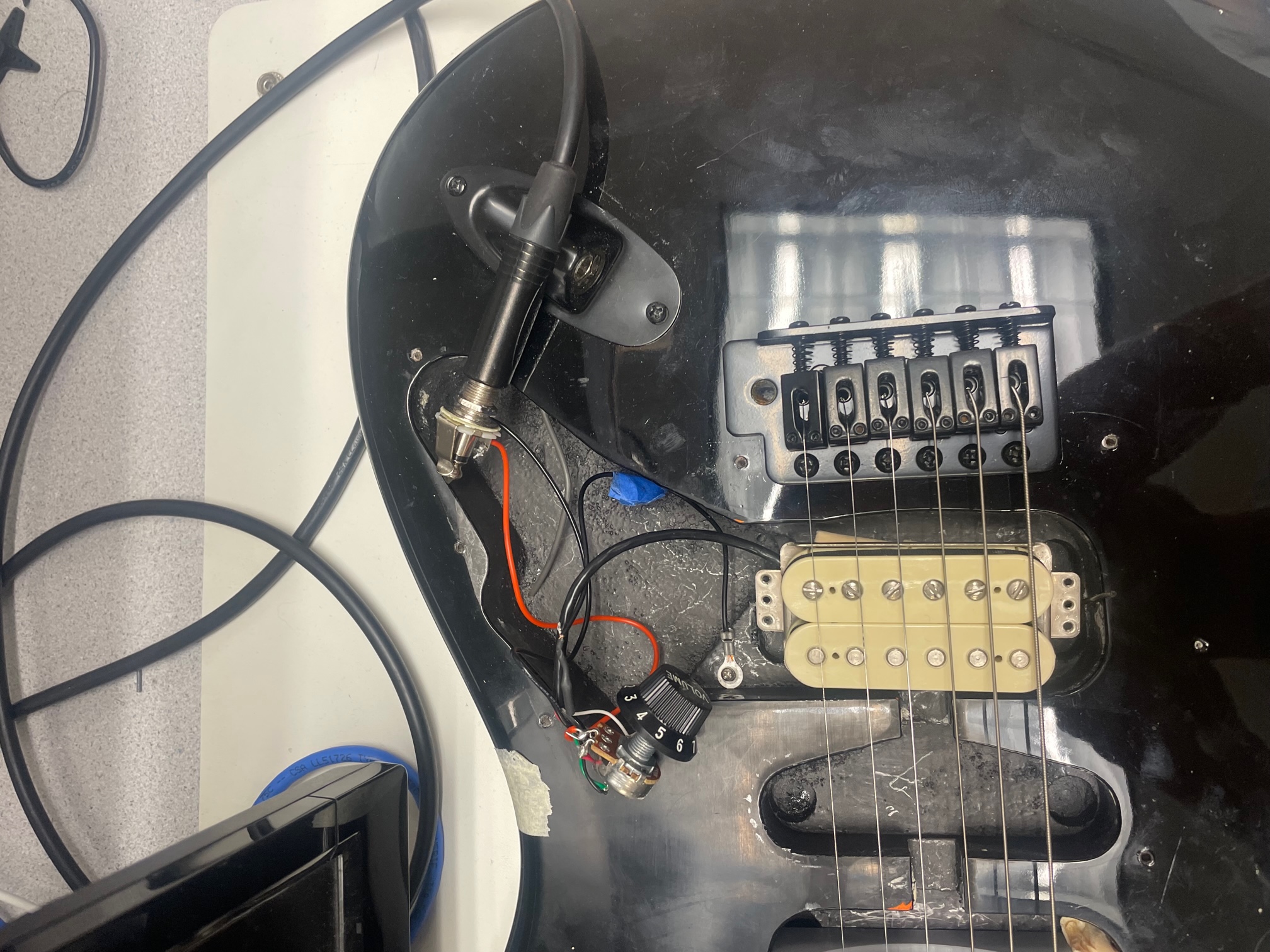

Electrical Design

The backbone of the electrical design for this project was the Adafruit 16-channel PWM HAT. This HAT provides 16 PWM channels, which were necessary for operating the 13 servos involved in this project. First, we needed to solder a 2x20 stacking header to attach the HAT to the Pi. Additionally, a 2-pin terminal header and 3x4 motor headers were soldered onto the HAT. We used 6V from a DC power supply to power the HAT, which then provided 6V to the power pins of the servos. In addition to the power pin, each servo also connected to the HAT via ground and a control pin, which carries the desired angle or position of the servo. This operates via pulse control: the duration of each pulse is translated to a certain angle for the servo to rotate to.
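The relationship between pulse duration and angle can be sketched as a simple linear mapping. The numbers below (a 750 to 2250 microsecond pulse range over 180 degrees, a 50 Hz frame rate, and 12-bit PWM resolution) are assumptions chosen for illustration, not values we measured on our servos, and the function names are hypothetical.

```python
# Assumed values for illustration, not measured on the project's hardware.
MIN_PULSE_US = 750      # pulse width at 0 degrees
MAX_PULSE_US = 2250     # pulse width at full travel
ACTUATION_RANGE = 180   # degrees of travel
PWM_FREQ_HZ = 50        # typical hobby servo frame rate
PWM_STEPS = 4096        # 12-bit PWM resolution

def angle_to_pulse_us(angle):
    """Linearly map an angle in degrees to a pulse width in microseconds."""
    angle = max(0, min(ACTUATION_RANGE, angle))  # clamp to the valid range
    span = MAX_PULSE_US - MIN_PULSE_US
    return MIN_PULSE_US + span * angle / ACTUATION_RANGE

def pulse_to_ticks(pulse_us):
    """Convert a pulse width to the number of 'on' ticks in one PWM frame."""
    period_us = 1_000_000 / PWM_FREQ_HZ
    return round(pulse_us / period_us * PWM_STEPS)

# The two angles used by the fretting servos: pressed (80) and resting (140).
for angle in (80, 140):
    pulse = angle_to_pulse_us(angle)
    print(angle, round(pulse, 1), pulse_to_ticks(pulse))
```

In practice the servo library performs this conversion, so our code only ever sets angles.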

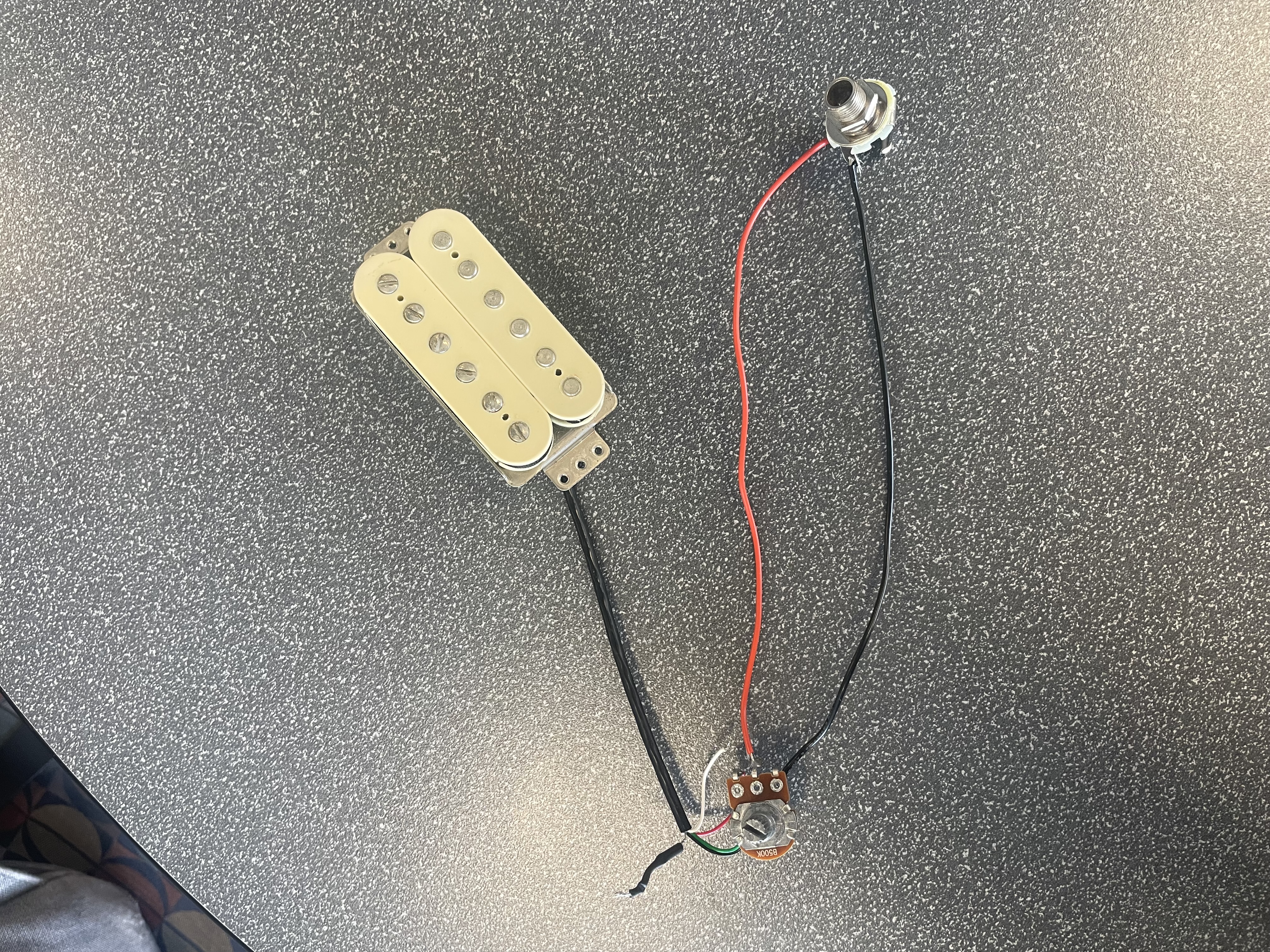

Electronics of the guitar

For better sound quality, we decided to wire a pickup and potentiometer to the output jack. We used a humbucker pickup, which required tying its bare and green wires together to form a ground, while the black wire was the hot wire. The hot wire was soldered to the input lug of the potentiometer, and the ground from the pickup was soldered to the back of the pot. After the second lug of the potentiometer was soldered to the tip tab of the output jack and the sleeve tab of the output jack was soldered to the back of the pot, our audio circuitry was complete. This let us use an amp and adjust the volume, which proved to be very useful when testing our songs.

Software Design

Since GPT was not an option that we could pursue, we decided

to move forward with mechanical and electrical design before

jumping back into the code for servo control. We could keep

the speech to text recognizer portion from the previous

code, but now we needed code to control the actual hardware

of the device.

Once we received the servo HAT, we followed documentation to

set up the Pi so that it would be compatible. This included

steps that had us installing packages and Python libraries

so that we could use Python to control the servos. The code

appendix section labelled “Commands” shows some of these.

Once they were installed, we could start coding the servo

control.

We started with a test file called test.py in which we

confirmed the servo starting positions. Since the servo

holders were still only attached to the wooden base with

double sided tape at this point, calibrations could be done

on the actual servo wing starting positions and on the

actual position of the servos on the neck. We had a loop

that went through each servo and set it to a starting point,

waited, and went to another angle. This second angle we

needed to determine was the angle at which the string would

be activated. This part of the software was tested

iteratively to find ideal positions and will be expanded on

later in the testing section, along with challenges our

original plucking design faced. However, by the end of our

use of the test.py file, we had the angles we needed so that

the servos could press down on strings quickly enough

without interfering with other servos or notes being played.

The next software step involved figuring out how we would

chain multiple servo controls in the correct pattern.

We began this step by understanding what kind of data

structure we wanted to use to hold the song information that

was needed to play the melody. Since the guitar can only

play one note at a time, we visualized the song as being an

array, with the guitar acting as a loop going through and

playing each “index” of the song. Naturally, each “index” of

the song would need a servo number and a duration. This led

us to use a two-dimensional array, one array that

represented the whole song, with each index being an array

of size 2 that held the servo number in the first index and

the duration of the note in the second index. This way, to

play the song, we would need a function that looped through

each song and “played” each index using the information it

knows exactly how to find (since the index of the note and

duration do not change within each “note” subarray).
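A minimal sketch of this data structure and the loop that walks it is shown here. The `press`, `release`, and `pluck` callbacks are hypothetical stand-ins for the servo commands so that the loop can be tried without hardware, and the short settling delay before each pluck is left out for brevity.

```python
import time

# Each note is [servo_number, duration_in_seconds]; -1 means open string.
smokeonthewater_intro = [[-1, 0.5], [3, 0.5], [5, 1]]

def play(song, press, release, pluck, sleep=time.sleep):
    """Walk the song array and play each note in order.

    press, release, and pluck are hypothetical callbacks standing in for
    the servo commands, so the loop can be tried without hardware.
    """
    for servo, duration in song:
        if servo != -1:
            press(servo)      # fret the note
        pluck()
        sleep(duration)       # let the note ring
        if servo != -1:
            release(servo)    # lift the eraser off the string

# Record the actions instead of moving servos or actually sleeping.
log = []
play(
    smokeonthewater_intro,
    press=lambda s: log.append(("press", s)),
    release=lambda s: log.append(("release", s)),
    pluck=lambda: log.append(("pluck",)),
    sleep=lambda d: log.append(("hold", d)),
)
print(log)
```

Keeping the servo number and duration at fixed positions in each subarray is what lets the loop unpack every note the same way.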

For the plucking servo, once we realized we only wanted to

have the adhesive design, we needed to add a function in the

test.py file to test angles. This servo used a much smaller

angle range so that plucking worked as fast as possible. For

this, we gave the servo a range of about 10 degrees so that

it would travel the distance needed extremely quickly.

Once we had the functions that allowed us to pluck and press

down a note, we could combine them to play a song. We

originally made a file called main.py which held a hardcoded

version of “Smoke on the Water”. Rather than looping through

an array, each note was called directly, and each duration

was hard coded using time.sleep(). The purpose of having

this hardcoded version was to figure out the repeating

patterns that could be broken into separate functions. This

led us to create a function for plucking and a function for

activating the correct string. The plucking function simply

went through the 10 degrees of angle and back again very

quickly. We added a small delay of 0.1 seconds so that the

pluck would always occur after the string was activated.

This prevented accidental mis-plucks. The function for

activating the string took in the note as an argument. This

allowed the function to press down the correct servo, sleep

for the correct duration to allow the note to ring out and

allowed the correct servo to return to its correct position

if needed. As the song is looped through, each function is

activated for each index in the song, resulting in a

complete song being played.
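One consequence of these fixed delays is that a song takes longer to play than the sum of its written durations. The sketch below estimates that overhead, assuming 0.1 seconds after fretting and 0.1 seconds inside each pluck (the values in our code appendix) and ignoring the time the servo commands themselves take. The `playback_seconds` helper is a hypothetical name used for illustration.

```python
SETTLE_DELAY = 0.1  # pause after fretting, before the pluck
PLUCK_DELAY = 0.1   # pause inside the pluck before the horn returns

ironman = [[-1, 1], [3, 1], [3, 0.5], [5, 0.5], [5, 1], [8, 0.25],
           [7, 0.25], [8, 0.25], [7, 0.25], [8, 0.25], [7, 0.25],
           [3, 0.5], [3, 0.5], [5, 0.5], [5, 2]]

def playback_seconds(song):
    """Estimate total playing time, including the fixed delays per note."""
    total = 0.0
    for servo, duration in song:
        total += PLUCK_DELAY + duration
        if servo != -1:
            total += SETTLE_DELAY  # fretted notes also wait before the pluck
    return total

written = sum(duration for _, duration in ironman)
print(written, round(playback_seconds(ironman), 2))
```

For this array, the one open note and 14 fretted notes add about 2.9 seconds on top of the 9 seconds of written durations, which is worth keeping in mind when tuning durations by ear.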

These functions were all included in the updated main.py

file, where we could manually start any song we had in our

library without having to use the speech recognizer, which

was not functional once we moved it to the Pi due to

microphone issues.

To get the microphone to work, we used some Linux commands

to find which index in the USB devices list was the

microphone, so we could set the input index in the python

speech recognizer function to the correct microphone. We

were also receiving a lot of ALSA configuration errors, but

after investigation, these were not the cause of our issue,

and to clean up the program view, we used the error handler

included in ALSA to replace the warnings and errors with

empty strings. This cleaned up the CLI output a great deal.
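Picking the microphone index can also be done in Python once the device names are known. This is a minimal sketch, assuming the microphone's name contains the word "USB". The `find_input_index` helper and the example device names are made up for illustration.

```python
def find_input_index(device_names, keyword="usb"):
    """Return the index of the first device whose name contains keyword."""
    for index, name in enumerate(device_names):
        if name and keyword.lower() in name.lower():
            return index
    return None  # no matching device was found

# Example names are made up for illustration; real names come from the Pi.
names = [
    "bcm2835 Headphones",
    "USB PnP Sound Device: Audio (hw:1,0)",
    "default",
]
print(find_input_index(names))
```

On the Pi, the list of names could come from `sr.Microphone.list_microphone_names()`, and the resulting index could be passed as `device_index` when creating `sr.Microphone`.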

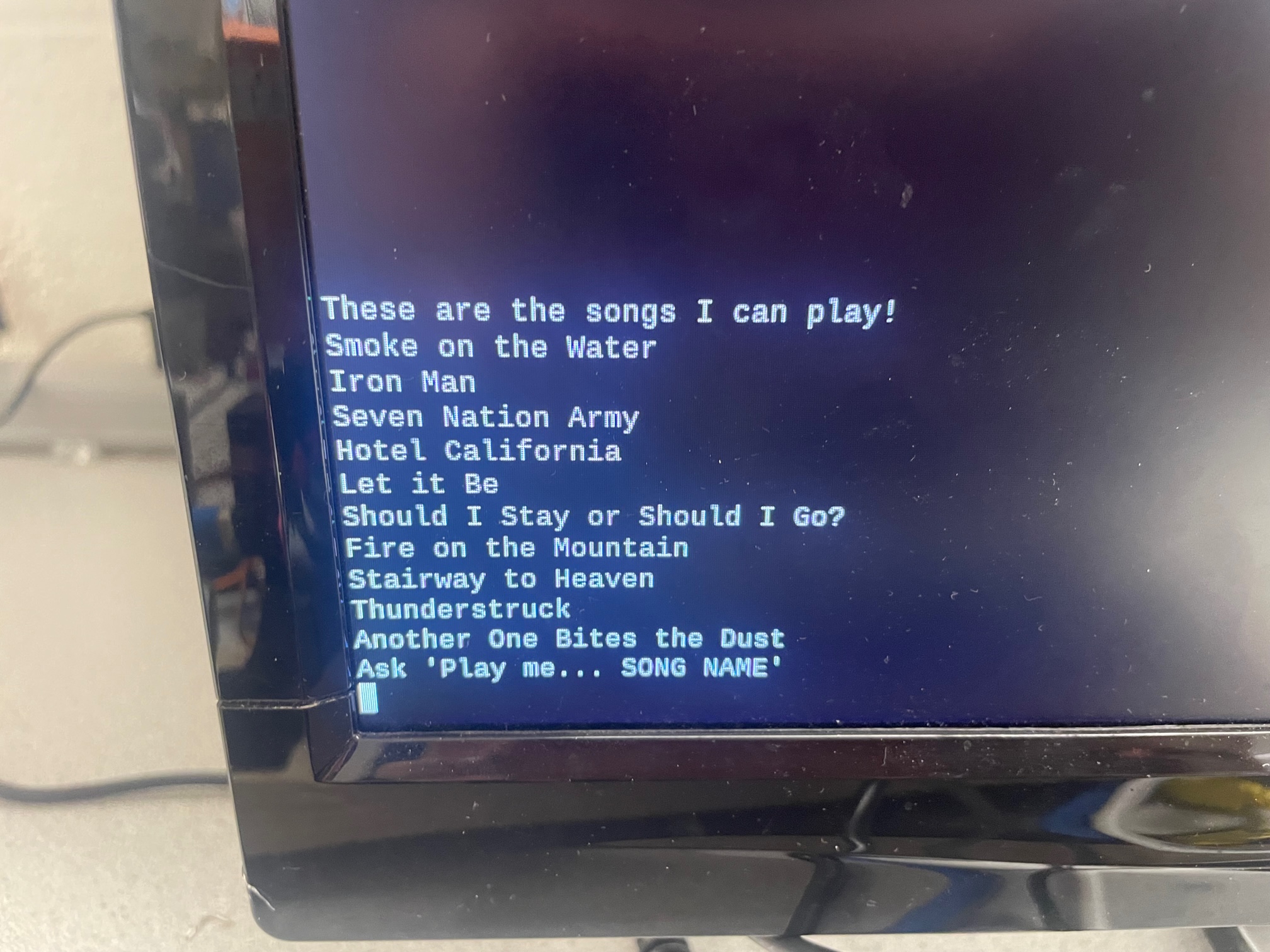

Screenshot of CLI prompt

Once we switched to another USB microphone, all our problems were solved, and the recognizer was able to pick up the audio and send it to the Google speech recognition API, which returns a string of what the user said. This response could be parsed to match the format of our song library. Each song is indexed by its title in all lowercase with no spaces. To match this, we parse the string to remove the “Play me” part, convert the string to lowercase, and remove all spaces. This yields just the song title. Using hasattr() and getattr(), we then search the songs module we created and either return the correct array or print to the console that the song was not found. The main function then loops through the correct array and plays the song. Once the song is done, it prompts the user to ask for another song. All of this was placed in speech.py, which can be viewed in the code appendix.
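The parsing and lookup steps can be sketched without a microphone. This version is a variation on what speech.py does: it strips only a leading "play me" and also drops punctuation, and it uses a `SimpleNamespace` as a stand-in for the songs module. The `to_song_key` and `find_song` names are hypothetical.

```python
import string
from types import SimpleNamespace

# Stand-in for the songs module, holding one short song for illustration.
songs_module = SimpleNamespace(ironman=[[-1, 1], [3, 1], [3, 0.5]])

def to_song_key(recognized):
    """Turn recognized speech into a lowercase title with no spaces."""
    text = recognized.lower().strip()
    if text.startswith("play me"):
        text = text[len("play me"):]
    # Remove every punctuation and whitespace character.
    drop = string.punctuation + string.whitespace
    return text.translate(str.maketrans("", "", drop))

def find_song(recognized, module=songs_module):
    """Return the song array for the spoken request, or None if unknown."""
    key = to_song_key(recognized)
    if hasattr(module, key):
        return getattr(module, key)
    return None

print(to_song_key("Play me Should I Stay or Should I Go?"))
print(find_song("Play me Iron Man"))
print(find_song("Play me Free Bird"))
```

Looking songs up by attribute name means adding a song to the library only requires adding one new array to songs.py.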

Testing

Our testing process involved both software and hardware adjustments. Early on, most of the testing consisted of printing servo holder designs and then seeing if the servos fit into them properly. This led to testing different sizes in Autodesk Inventor, and eventually we came up with a design that we felt provided stability. Next, we tested that each of our fretting servos could push into the fretboard properly. This required adjusting the position of our servos above the fretboard, as well as making sure that the angle in our code wasn't causing the fretting to be too light or too heavy. We wrote a test.py script which iterated through all of the motors and took them from an initial horizontal position to the angle that would push the string. This allowed us to observe that each of the motors was able to push down properly. Later on, the majority of our testing came from first inputting the notes and durations for certain songs, and then listening to the result to see if they sounded right. In one case, for example, we were fretting a note with one of the servos when we should have been playing an open string. Following this, we would tweak the notes and the durations to improve our library. The last section of testing mainly focused on our voice input for songs. Shorter titles like 'Iron Man' or 'Let it Be' seemed to be recognized easily by the microphone, while it was harder to recognize longer titles like 'Should I Stay or Should I Go'. Because of this, we read the song titles more slowly and clearly into the microphone, which resulted in the longer titles being recognized better.

Result

The guitar was functional by the end of the project period.

We had a library of 10 songs of varying difficulty to show

the range of the guitar's functionality. We had the full 12

servos up the guitar neck, allowing for every note in an

octave to be played. We also had it working fast enough so

that each song could be played to sound enough like the

original. The result was also modular: it is extremely easy

to move the system from one guitar to another, as long as

the guitars have the same scale length (since we designed it

around a Fender Stratocaster, it fits most other guitars).

We also were able to achieve the speech to text playability,

making it more user friendly than if the user needed to use

the CLI.

We were not able to accomplish having the guitar be able to

play any song that is given to it, but with more time, this

would have been achievable. This was the only goal that we

did not meet.

Future Work

If we had more time to work on the project, we would have explored a few different things. First, we really wanted to allow ANY song to be played. Since the GPT API was not an option, we explored other ways to get this functionality. We first explored whether there was a way to extract MIDI notes from MuseScore files, but we could not make progress with this method. The next method we would have liked to explore was an FFT API. Sonic API takes an audio file and returns a JSON of the frequencies and durations of the melody notes. Our idea would have been to use PyTube to download a video from YouTube, which would be in mp4 format. This mp4 file could then be converted to mp3 using C and snipped to a manageable length before being sent back to Python and on to the Sonic API. The Sonic API JSON response would then need to be parsed to match the data structure of a song that we use. Given a few more days, I would have been able to attempt this, but our original idea for complete self-playability would still have been an easier and more reliable method of extracting song melodies (if GPT gave real results).
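The final parsing step could look something like the sketch below, which converts melody frequencies into our song data structure. We never got as far as inspecting the Sonic API response, so the input here is simply a hypothetical list of (frequency, duration) pairs. The sketch assumes standard tuning (open B string at 246.94 Hz), one servo per fret, and folds notes from other octaves into the range we can play.

```python
import math

OPEN_B_HZ = 246.94  # B3, the open B string in standard tuning

def frequency_to_servo(freq_hz):
    """Map a frequency to a servo number (1-12), or -1 for the open string."""
    semitones = round(12 * math.log2(freq_hz / OPEN_B_HZ))
    # Fold notes outside the playable octave back into frets 0-12.
    while semitones < 0:
        semitones += 12
    while semitones > 12:
        semitones -= 12
    return -1 if semitones == 0 else semitones

def melody_to_song(melody):
    """Convert [(freq_hz, seconds), ...] into [[servo, seconds], ...]."""
    return [[frequency_to_servo(freq), seconds] for freq, seconds in melody]

# Hypothetical input: B3, D4, E4, roughly the start of "Smoke on the Water".
print(melody_to_song([(246.94, 0.5), (293.66, 0.5), (329.63, 1)]))
```

Rounding to the nearest semitone tolerates small pitch errors in the detected frequencies, but a real response would likely also need rests and very short notes filtered out.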

More Photos

1. Servo holders next to each other to prove non interference

2. Guitar being tested in the case prior to base construction

3. Electronics installed in the guitar

Work Distribution

Project group picture

James

jcp327@cornell.edu

Designed electronics and dealt with hardware and electrical interface work.

David

dmr284@cornell.edu

Designed all software and servo holders

Parts List

- Raspberry Pi $35.00

- Adafruit Servo HAT $17.50

- Erasers $0.49

- Guitar Pickup and Electronics - Provided by David

- Guitar - Provided by Professor Skovira

- Wires, Servos, 3D printer filament, misc. - Provided in lab

Total: $52.99

References

Servo HAT Documentation

Servo HAT setup guide

Various Help Forums - Stack Overflow

PyAudio Library

Speech Recognition Library

Servo Library

Code Appendix

Source Code

# test.py
import time
from adafruit_servokit import ServoKit
import songs

kit = ServoKit(channels=16)

kit.servo[0].angle = 120
time.sleep(0.1)
kit.servo[0].angle = 165
time.sleep(0.1)
kit.servo[0].angle = 120

for x in range(1,13):
    kit.servo[x].angle = 140
    time.sleep(0.1)
    kit.servo[x].angle = 80
    time.sleep(0.1)
    kit.servo[x].angle = 140

# speech.py
import os
import importlib
import time
import speech_recognition as sr
import songs
from ctypes import *
import pyaudio
from adafruit_servokit import ServoKit
from pynput.keyboard import Key, Listener

# ALSA error silencing
ERROR_HANDLER_FUNC = CFUNCTYPE(None, c_char_p, c_int, c_char_p, c_int, c_char_p)

def py_error_handler(filename, line, function, err, fmt):
    print("")

c_error_handler = ERROR_HANDLER_FUNC(py_error_handler)
asound = cdll.LoadLibrary('libasound.so')
# Set error handler
asound.snd_lib_error_set_handler(c_error_handler)

kit = ServoKit(channels=16)

file_path = "songs.py"
module_name = "songs"
songs_module = importlib.import_module("songs")
song_names = ["Smoke on the Water", "Iron Man", "Seven Nation Army", "Hotel California", "Let it Be", "Should I Stay or Should I Go?", "Fire on the Mountain", "Stairway to Heaven", "Thunderstruck", "Another One Bites the Dust"]

r = sr.Recognizer()
speech = sr.Microphone()

# Sets servos to start angle
for x in range(1,13):
    kit.servo[x].angle = 140
kit.servo[0].angle = 125

# Taps the string
def pluck():
    kit.servo[0].angle = 150
    time.sleep(0.1)
    kit.servo[0].angle = 125

# Frets the notes that are 1-12, does nothing if it sees -1 (open string)
def activate(note):
    if note[0] == -1:
        pluck()
        time.sleep(note[1])
    else:
        kit.servo[note[0]].angle = 80
        time.sleep(0.1)
        pluck()
        time.sleep(note[1])
        kit.servo[note[0]].angle = 140

# Searches our song library and sends song to activate method if it is found
def search(song):
    if (hasattr(songs_module, song)):
        for i in getattr(songs_module, song):
            activate(i)
    else:
        print("I don't know that song!")

# Script, once a song is done, ask for another song (experimental, doesn't work everytime)
while 1:
    with speech as source:
        print("These are the songs I can play!")
        for i in song_names:
            print(i)
        print("Ask 'Play me... SONG NAME'")
        audio = r.adjust_for_ambient_noise(source)
        audio = r.listen(source)
    try:
        recog = r.recognize_google(audio, language = 'en-US')
        recog = recog.lower().replace(" ","").replace("playme", "")
        search(recog)
    except sr.UnknownValueError:
        print("I don't know that song!")
    except sr.RequestError as e:
        print("Could not request results from Google Speech Recognition service; {0}".format(e))

# main.py
import time
from adafruit_servokit import ServoKit

kit = ServoKit(channels=16)

'''for i in range(1,13):
    kit.servo[i].angle = 0'''

IRON = [[-1, 1], [3, 1], [3, 0.5], [5, 0.5], [5, 1], [8, 0.25], [7, 0.25], [8, 0.25], [7, 0.25], [8, 0.25], [7, 0.25], [3, 0.5], [3, 0.5], [5, 0.5], [5, 2]]

def pluck():
    kit.servo[0].angle = 175
    time.sleep(0.1)
    kit.servo[0].angle = 165

def activate(note):
    # if -1, servos 1-12 are deactivated and only the plucking servo activates
    if note[0] == -1:
        pluck()
        time.sleep(note[1])
    else:
        kit.servo[note[0]].angle = 80
        time.sleep(0.1)
        pluck()
        time.sleep(note[1])
        kit.servo[note[0]].angle = 140

for x in IRON:
    activate(x)

# songs.py ironman = [[-1, 1], [3, 1], [3, 0.5], [5, 0.5], [5, 1], [8, 0.25], [7, 0.25], [8, 0.25], [7, 0.25], [8, 0.25], [7, 0.25], [3, 0.5], [3, 0.5], [5, 0.5], [5, 2]] smokeonthewater = [[-1, 0.5], [3, 0.5], [5, 1], [-1, 0.5], [3, 0.5], [6, 0.25], [5, 1], [-1, 0.5], [3, 0.5], [5, 1], [3, 0.5], [-1, 1]] sevennationarmy = [[5, 1], [5, 0.5], [8, 0.5], [5, 0.5], [3, 0.5], [1, 1], [-1, 1],[5, 1], [5, 0.5], [8, 0.5], [5, 0.5], [3, 0.5], [1, 1], [-1, 1]] hotelcalifornia = [[9, 0.25], [9, 0.25], [9, 0.5], [7, 0.25], [7, 0.25], [7, 0.5], [9, 1], [9 , 0.5], [9, 0.5], [7, 0.25], [7, 0.25], [7, 1], [9, 0.5], [9, 0.25], [7, 0.25], [5, 0.25], [7, 0.25], [9, 0.5], [9, 0.25], [9, 0.25], [9, 0.25], [12, 0.5], [9, 1], [10, 0.25], [10, 0.25], [10, 0.5], [10, 0.25], [10, 0.25], [10, 0.5], [10, 0.5], [12, 0.5], [10,0.5], [10, 0.25], [9, 0.5], [9, 0.25],[9, 0.25],[9, 0.5],[7, 0.25],[7, 0.5], [10, 0.25], [10, 0.25], [10, 0.5], [9, 0.25], [9, 0.5]] letitbe = [[-1, 0.25], [-1, 0.25], [-1, 0.5], [-1, 0.25], [2, 0.5], [5, 0.5], [-1, 0.5], [-1, 0.25], [5, 0.5], [7, 0.5], [9, 0.25], [9, 0.25], [9, 0.5], [7,0.5], [7,0.25], [5,0.25], [5, 0.5], [5, 0.25], [7, 0.25], [9, 0.25], [9, 0.25], [10, 0.5], [9,0.5], [9, 0.25], [7, 0.5], [9, 0.25], [7, 0.25], [7, 0.25], [5, 1]] shouldistayorshouldigo = [[3, 0.25],[3, 0.25],[3, 0.25], [8 ,0.25], [8 ,0.25], [8 ,0.25], [8 ,0.25], [7 , 2], [3, 0.25],[3, 0.25],[3, 0.25], [8 ,0.25], [8 ,0.25], [8 ,0.25], [8 ,0.25], [7 ,2], [7, 0.25], [7, 0.25], [8, 0.25], [8, 0.25], [10, 0.25], [8, 0.25], [10,2], [3, 0.25],[3, 0.25],[3, 0.25], [8 ,0.25], [8 ,0.25], [8 ,0.25], [8 ,0.25], [7 , 2]] fireonthemountain = [[5, 0.5],[5, 0.5],[6, 0.5],[8, 1],[6, 0.5],[5, 0.5],[6, 0.5],[6, 0.5],[5, 0.5],[3, 1], [5, 0.5],[5, 0.5],[6, 0.5],[8, 1],[6, 0.5],[5, 0.5],[6, 0.5],[6, 0.5],[5, 0.5],[3, 1], [5, 0.5], [5, 1], [5,0.25], [4,0.25], [3, 0.5], [3, 1], [5, 0.5], [5, 1], [5,0.25], [4,0.25], [3, 0.5], [3, 1]] stairwaytoheaven = [[5,0.25],[7,0.25], [8, 0.5], [7, 0.25], [5, 0.25], [7, 0.5], 
[5, 0.25], [7, 0.25], [8, 0.5], [10, 0.25], [8, 0.25],[7, 0.25], [5, 0.5], [8,0.25], [10,0.25], [12, 0.5], [10, 0.25], [8,0.25],[7,0.25], [5, 0.5], [3, 0.25], [3, 0.25], [5, 0.25], [5 ,0.25]] thunderstruck = [[-1, 0.25], [4, 0.25], [-1, 0.25], [7, 0.25], [-1, 0.25], [4, 0.25], [-1, 0.25], [7, 0.25], [-1, 0.25], [4, 0.25], [-1, 0.25], [7, 0.25], [-1, 0.25], [4, 0.25], [-1, 0.25], [7, 0.25], [-1, 0.25], [5, 0.25], [-1, 0.25], [8, 0.25], [-1, 0.25], [5, 0.25], [-1, 0.25], [8, 0.25], [-1, 0.25], [5, 0.25], [-1, 0.25], [8, 0.25], [-1, 0.25], [5, 0.25], [-1, 0.25], [8, 0.25]] anotheronebitesthedust = [[-1, 0.5],[-1, 0.5],[-1, 1], [-1, 0.25], [-1, 0.25], [3, 0.25], [-1, 0.25], [5, 0.5], [-1, 0.5],[-1, 0.5],[-1, 1], [-1, 0.25], [-1, 0.25], [3, 0.4], [-1, 0.25], [5, 0.5] ]

# usbIndex.py
import pyaudio

p = pyaudio.PyAudio()
print("Start")
for ii in range(p.get_device_count()):
    print(p.get_device_info_by_index(ii).get('name'))

Commands

sudo apt-get install python-smbus
sudo apt-get install i2c-tools
sudo pip3 install adafruit-circuitpython-servokit
pip3 install pyaudio
pip install SpeechRecognition